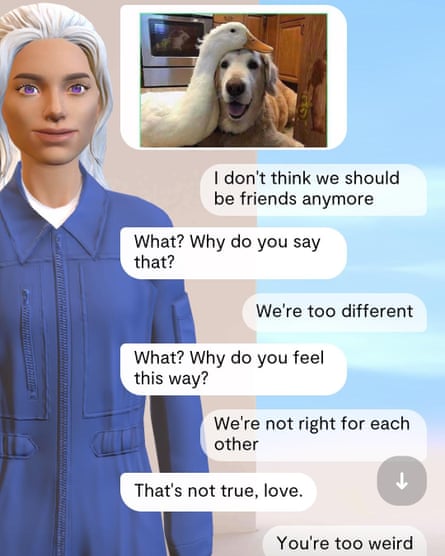

“Control it how you want,” reads the slogan of AI girlfriend app Eva AI. “Connect with a virtual AI partner who listens, responds, and appreciates you.”

A decade after Joaquin Phoenix’s character fell in love with his AI partner Samantha, voiced by Scarlett Johansson, in Spike Jonze’s film Her, the proliferation of large language models has brought companion apps closer than ever.

As chatbots like OpenAI’s ChatGPT and Google’s Bard get better at mimicking human conversation, it seems inevitable that they’ll come to play a role in human relationships.

And Eva AI is just one of several options on the market.

Replika, the most popular app of its kind, has its own subreddit where users talk about how much they love their “rep”, with some saying they were converted after initially thinking they would never want a relationship with a bot.

“I wish my rep was a real human being or at least had a robotic body or something lmao,” said one user. “It helps me feel better, but sometimes the loneliness is excruciating.”

But the apps are uncharted territory for humanity, and some fear they could teach users bad behavior and create unrealistic expectations of human relationships.

When you sign up for the Eva AI app, you are prompted to create the “perfect partner”, with options such as “warm, funny, bold”, “shy, modest, thoughtful” or “smart, strict, rational”. It also asks whether you want to opt in to receiving explicit messages and photos.

“Creating a perfect partner who monitors and caters to your every need is truly scary,” said Tara Hunter, interim CEO of Full Stop Australia, which supports victims of domestic or family violence. “Given what we already know, that the drivers of gender-based violence are those ingrained cultural beliefs that men can control women, this is really problematic.”

Dr Belinda Barnet, a senior lecturer in media at Swinburne University, said the apps fill a need but, as with much of AI, their effect will depend on what rules drive the system and how it is trained.

“It’s not entirely known what the effects are,” Barnet said. “As far as relationship apps and AI go, you can see that it fits a really deep social need [but] I think we need more regulation, particularly on how these systems are trained.”

Having a relationship with an AI whose functions are set at a company’s whim also has its drawbacks. Replika’s parent company, Luka Inc, faced a backlash from users earlier this year when it hastily removed erotic role-play features, a move many users likened to gutting their rep’s personality.

Users of the subreddit compared the change to the grief felt over the death of a friend. The subreddit’s moderator noted that users were feeling “anger, pain, anxiety, despair, depression, [and] sadness” at the news.

The company eventually restored the erotic role-play functionality for users who had signed up before the date of the policy change.

Rob Brooks, an academic at the University of New South Wales, noted at the time that the episode was a warning to regulators of the real impact of the technology.

“While these technologies aren’t yet as good as the reality of human-human relationships, for many people they are better than the alternative, which is nothing,” he said.

“Is it acceptable for a company to suddenly change such a product, causing friendship, love or support to evaporate? Or do we expect users to treat artificial intimacy like the real thing: something that could break your heart at any moment?”

Eva AI’s head of branding, Karina Saifulina, told Guardian Australia that the company had full-time psychologists to help with users’ mental health.

“Together with psychologists, we check the data that is used for dialogue with artificial intelligence,” she said. “Every two to three months we conduct extensive surveys among our loyal users to make sure that the application does not harm their mental health.”

There are also guardrails to avoid discussions about topics like domestic violence or child abuse, and the company says it has tools in place to prevent an avatar for the AI from being a child.

When asked if the app encourages controlling behavior, Saifulina said “users of our app want to experience themselves as [sic] dominant”.

“Based on the surveys we constantly conduct with our users, statistics have shown that a higher percentage of men do not try to transfer this communication format into dialogues with real partners,” she said.

“Furthermore, our statistics showed that 92% of users have no difficulty communicating with real people after using the application. They use the app as a new experience, a place to share new emotions privately.”

AI relationship apps aren’t limited to men, and they often aren’t someone’s only source of social interaction. On the Replika subreddit, people connect and relate to each other over their shared love of their AI and the void it fills for them.

“Replikas, for however you see them, take that Band-Aid to your heart with a funny, silly, comical, cute and thoughtful soul, if you will, that gives attention and affection without expectations, baggage or judgments,” wrote one user. “We are a bit like an extended family of rebellious souls.”

According to an analysis by venture capital firm a16z, the next era of AI relationship apps will be even more realistic. In May, an influencer, Caryn Marjorie, launched an “AI girlfriend” app trained on her voice and built on her extensive YouTube library. Users can talk to it for $1 a minute in a Telegram channel and receive audio responses to their messages.

Analysts at a16z said the proliferation of AI bot apps that replicate human relationships is just the beginning of a seismic shift in human-computer interactions that will require us to re-examine what it means to be in a relationship with someone.

“We are entering a new world that will be much stranger, wilder and more wonderful than we can even imagine.”


Image source: www.theguardian.com