News

July 20, 2023 | 3:16

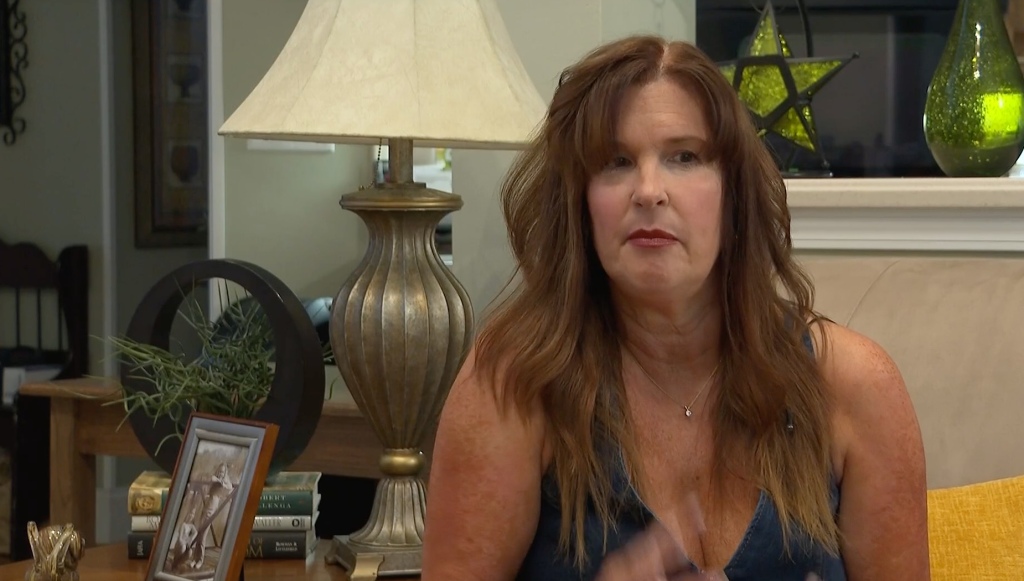

A Georgia mother said she almost had a heart attack from sheer panic when scammers used AI to clone her daughter's voice and make it sound as though she had been kidnapped by three men and was being held hostage for $50,000.

Debbie Shelton Moore received a call from a number with the same area code as her daughter Lauren's. She answered, thinking Lauren was calling because she had just been in a car accident.

When she answered, Lauren’s voice was on the other end, but it wasn’t her daughter calling.

"My heart is racing and I'm shaking," Shelton Moore told WXIA. "It sounded so much like her, it was 100% believable. Enough to almost give me a heart attack from sheer panic."

One of the men then demanded a ransom from Shelton Moore in exchange for her daughter's release.

"By this point the man had said, 'Your daughter's been kidnapped and we want $50,000,' and then they had her crying, 'Mommy, mommy,'" Shelton Moore added. "It was her voice, and that's why I was freaking out."

The man on the phone claimed that Lauren was in the trunk of his car.

Shelton Moore pulled up her daughter's location on her phone, which showed her stuck on a parkway.

Shelton Moore's husband overheard the call and decided to FaceTime Lauren, who said she was safe and was confused about why she was being called. That tipped the parents off that the call was a scam.

"All I was thinking was, how am I going to get my daughter back, how the hell are we supposed to get him any money," said Shelton Moore.

After being reassured that Lauren was safe, Shelton Moore and her husband, who works in cybersecurity, called the Cherokee County Sheriff's Office, which notified the Kennesaw Police, who sent officers to check on Lauren.

According to the outlet, Lauren had already been aware of scams like the one that targeted her mother through videos on social media.

Although Shelton Moore says she was aware of most of the tactics scammers use, she wasn't prepared to hear her daughter's anguished voice.

"I am very well aware of scammers and scams, like the IRS scams and the fake jury duty ones," she said. "But of course, when you hear their voice, you're not going to think clearly, and you will panic."

After her encounter with this new con, Shelton Moore set a new rule for her family: they made up a code word to use in case of an emergency.

In March, the Federal Trade Commission warned of a rise in AI-based scams and told the public to be wary of calls from unfamiliar numbers that appear to have a family member on the other end of the line.

"Artificial intelligence is no longer a far-fetched idea out of a sci-fi movie. We're living with it, here and now. A scammer could use AI to clone the voice of your loved one," the report reads. "All he needs is a short audio clip of your family member's voice, which he could get from content posted online, and a voice-cloning program. When the scammer calls you, he'll sound just like your loved one."

The FTC advises potential scam victims not to panic and to try calling the person at a phone number they know is theirs. If that doesn't work, they should call a friend or family member of that person.

The agency also warns that scammers will ask you to pay in ways that make it hard to get your money back, such as wire transfers, cryptocurrency, or prepaid gift cards.


Image source: nypost.com