The advent of large language models (LLMs) has generated considerable public interest, especially since the emergence of ChatGPT. These models, trained on large amounts of data, can learn in context from only a handful of examples. This year, a paper presented at the Association for Computational Linguistics (ACL) meeting examines the importance of model scale for in-context learning and the interpretability of LLM architectures.

The study focuses on OPT-66B, a 66-billion-parameter LLM developed by Meta as an open replica of GPT-3. By analyzing OPT-66B, the researchers sought to determine whether all components of an LLM are essential for in-context learning, with the aim of identifying potential areas for improved training.

LLMs are built on the Transformer architecture, which is based on an attention mechanism. This mechanism lets the model determine which earlier tokens in a sequence it should focus on when generating the current token. These LLMs use multi-head attention, running multiple attention mechanisms in parallel. OPT-66B consists of 64 layers, each containing 72 attention heads. In each layer, the multi-head attention output then passes through a separate feed-forward network (FFN).
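To make the attention description above concrete, here is a minimal pure-Python sketch of causal multi-head attention. It is an illustration under simplifying assumptions, not OPT-66B's implementation: the learned query, key, value, and output projections are omitted, and the function names and toy dimensions are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention_head(queries, keys, values):
    """One attention head: position i attends only to positions 0..i."""
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scaled dot-product scores against the current and earlier tokens.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

def multi_head_attention(x, num_heads):
    """Split each token vector into num_heads slices, attend per slice,
    then concatenate the per-head outputs (projections omitted)."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    hd = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        part = [tok[h * hd:(h + 1) * hd] for tok in x]
        head_outputs.append(causal_attention_head(part, part, part))
    return [
        sum((head_outputs[h][i] for h in range(num_heads)), [])
        for i in range(len(x))
    ]

# Three toy tokens with a 4-dimensional embedding, split across 2 heads.
tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
attended = multi_head_attention(tokens, num_heads=2)
```

Because each head works on its own slice of the embedding, a head can be removed (its slice zeroed out) without touching the others, which is what makes head-level pruning possible.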

To study the OPT-66B model, the researchers employed two methods. First, they assigned a score to each attention head and each FFN to measure its importance to a given task. Using these scores, they pruned the model, discarding the least important components. Surprisingly, they found that a significant portion of the model could be removed without hurting performance. This suggested that OPT-66B, and potentially other leading LLMs, are undertrained.

The researchers found that important attention heads resided predominantly in the middle layers of the model, while important FFNs resided mainly in the later layers. Remarkably, even after removing up to 70% of the attention heads (about 15.7 billion parameters), the ability to perform zero- or few-shot in-context learning on 14 different natural-language-processing (NLP) datasets/tasks remained largely unchanged. They also identified a common subset of attention heads responsible for in-context learning across tasks and numbers of shots, indicating task-independent functionality. Finally, they observed that approximately 20% of the FFNs (about 8.5 billion parameters) could be removed with minimal impact on zero- or few-shot in-context learning.
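The score-then-prune procedure described above can be sketched as follows. This is only an illustration: the article does not say how the importance scores are computed, so the scores here are random placeholders, and `prune_mask` is a hypothetical helper name. Only the layer and head counts and the 70% figure come from the text.

```python
import random

NUM_LAYERS = 64        # OPT-66B layer count quoted in the article
HEADS_PER_LAYER = 72   # attention heads per layer quoted in the article

def prune_mask(scores, prune_fraction):
    """Return {component: keep?}, dropping the lowest-scoring fraction."""
    ranked = sorted(scores, key=scores.get)        # least important first
    n_prune = int(len(ranked) * prune_fraction)
    pruned = set(ranked[:n_prune])
    return {component: component not in pruned for component in scores}

# Placeholder scores (assumption): one random value per (layer, head) pair.
rng = random.Random(0)
head_scores = {
    (layer, head): rng.random()
    for layer in range(NUM_LAYERS)
    for head in range(HEADS_PER_LAYER)
}

mask = prune_mask(head_scores, prune_fraction=0.70)
kept = sum(mask.values())
print(len(mask), kept)  # 4608 heads in total, 1383 kept after pruning 70%
```

In a real experiment the mask would be applied inside the model's forward pass, and the scores would come from measuring each component's effect on task performance rather than from a random generator.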

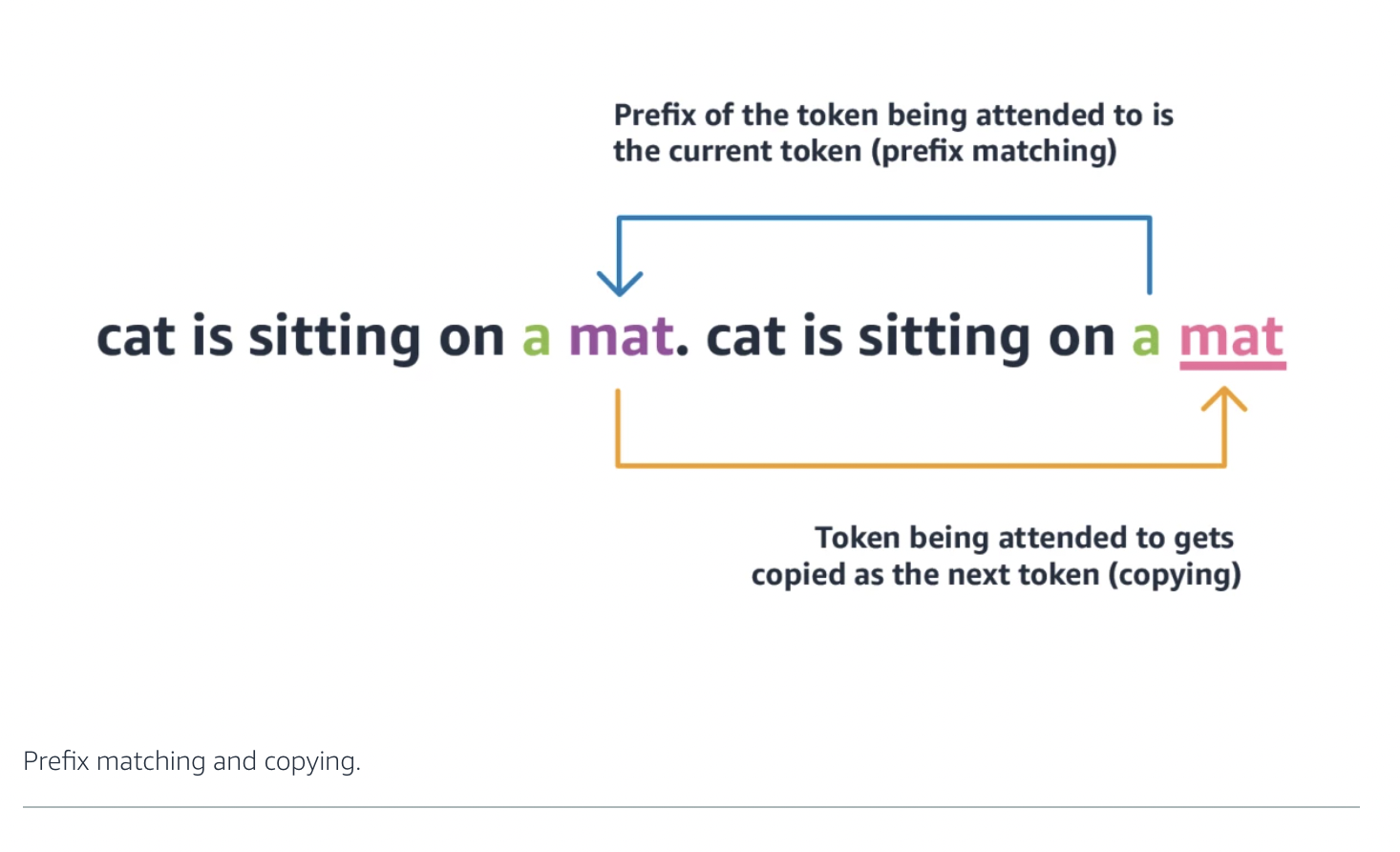

For their second analytic technique, the researchers assessed the ability of every attention head in OPT-66B to perform primitive, task-independent operations associated with in-context learning. These operations are prefix matching and copying: looking for a previous occurrence of the current token and copying the token that followed it. They found that a small group of attention heads showed nontrivial scores for both primitives. Interestingly, these heads also overlapped with the attention heads identified as important for specific tasks, suggesting their involvement in more sophisticated in-context learning behaviors, such as matching latent concepts.
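The two primitives above can be illustrated at the token level with a few lines of Python. This sketch shows the behavior the heads are scored on, not how the researchers measured it inside the model; the function name is hypothetical.

```python
def prefix_match_and_copy(tokens):
    """Predict the next token by (1) prefix matching: finding the most recent
    earlier occurrence of the current (last) token, and (2) copying: returning
    the token that followed that occurrence. Returns None if there is none."""
    if not tokens:
        return None
    current = tokens[-1]
    # Scan backwards over earlier positions only.
    for j in range(len(tokens) - 2, -1, -1):
        if tokens[j] == current:
            return tokens[j + 1]
    return None

print(prefix_match_and_copy(["A", "B", "C", "A"]))  # B
print(prefix_match_and_copy(["A", "B", "C", "D"]))  # None
```

A head that implements this pattern can continue a repeated sequence without any task-specific knowledge, which is why the primitives are described as task-independent.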

The study concluded that only a small group of attention heads and FFNs seemed crucial to in-context learning, implying that OPT-66B, and potentially other major LLMs, are undertrained. This observation is in line with recent research questioning the effectiveness of keeping the amount of pre-training data fixed while scaling models up. The results suggest that both the models and the amount of pre-training data need to scale in tandem for optimal performance. Future investigations could explore how newer LLM variants, including those tuned to follow instructions, fare in similar analyses.

Niharika is a technical consulting intern at Marktechpost. She is a third year student, currently pursuing her B.Tech at Indian Institute of Technology (IIT), Kharagpur. She is a very enthusiastic individual with a keen interest in machine learning, data science and artificial intelligence and an avid reader of the latest developments in these fields.

Image Source : www.marktechpost.com