IBM announced its Watsonx software development stack in May and is starting to ship it to customers today. We wanted to know exactly what the hell it is. And so we reached out to Sriram Raghavan, vice president of AI strategy and roadmaps at IBM Research, to understand what Watsonx is and why it will be important for commercializing large language models.

Let’s talk about Why before What.

As Raghavan sees it, there have been four different waves of AI. And despite Big Blues’ best efforts with its Watson software stack, famously first used to play the Danger! game show and beat the human experts in February 2011, it was not a huge commercial success in the enterprise for the first two waves.

But with the current wave of artificial intelligence, Raghavan says, businesses, governments and educational institutions, many of which are customers of IBM systems and system software, won’t lose the boat this time around.

Most businesses were certainly left standing on the IT docks with the early era of AI, with expert systems that were described and built nearly sixty years ago, most notably the Stanford Heuristic Programming Project led by Edward Feigenbaum. These systems consisted of giant trees of if then statements that included a rules engine, backed by a knowledge base, and an inference engine that applied rules to that knowledge base to make decisions within a narrowly defined field, such as medicine. This was a very semantic and symbolic approach with a lot of human coding.

Statistical approaches to artificial intelligence have been around since the inception of Alan Turing’s famous 1950 paper, Computer machines and intelligence, from which we get the Turing test, but for a long time they took a back seat to expert systems due to lack of data and lack of processing to chew through that vast data. But with machine learning in the 2010s the second era of AI statistical analysis of huge datasets was used to find relationships between the data and automatically create the algorithms.

In the third era of artificial intelligence, deep learning, neural network algorithms that somehow mimic the functioning of the human brain, which is itself a statistical machine if you really want to think about it (and even if you don’t) were being used to abstract input data and the deeper the networks, which means the number of levels of convolutions and the number of transformations is deep.

In the fourth era, which includes large language models, what are often called basic models, this transformation and deepening is taken to an extreme, as is the hardware that executes it, and therefore the emergent behavior we are seeing with GPT, BERT , PaLM, LLaMA and other models of transformers.

Personally, we don’t see them as epochs at all, but rather as stages of growth, all related to each other and on a continuum. A seed is just a small tree waiting to be born.

However you want to draw the lines, as Raghavan does or not, transformers are championing large language models (LLM) and deep learning recommendation systems (DLRM), and these in turn are transforming business. And more general machine learning techniques will also be used by businesses for image and word recognition and other tasks that are still important.

Baseline models enable generative AI, Raghavan says The next platform. But interestingly, the baseline models also greatly accelerate and improve what you can do with non-generative AI and it’s important not what they will do for non-generative tasks. And the reason we’re bringing Watsonx together is that we want a single enterprise-grade hybrid environment where customers can leverage both machine learning and underlying models. That’s what this platform is all about.

Getting IBM or any other software vendor to be specific about what is and isn’t in its AI platform is tricky. We are still the only ones who have actually divulged how the original Watson system was built. It was essentially an Apache Hadoop cluster for storing approximately 200 million pages of text married to the Apache UIMA data management framework plus over 1 million lines of custom code for the DeepQA question and answer engine, all running on a rather modest Power7 cluster with 2,880 cores and 11,520 threads and 16TB of main memory. Something that is less powerful than a single GPU is today, albeit with much less memory. (At least two orders of magnitude less, to be exact, and this is not precisely progress in terms of the relationship between computation, memory capacity and memory bandwidth.)

The Watson AI stack has gone through many iterations and permutations in the last decade since the machine of the same name beat humans, and this new Watsonx stack will work with many of those existing tools, such as the Watson Studio development environment.

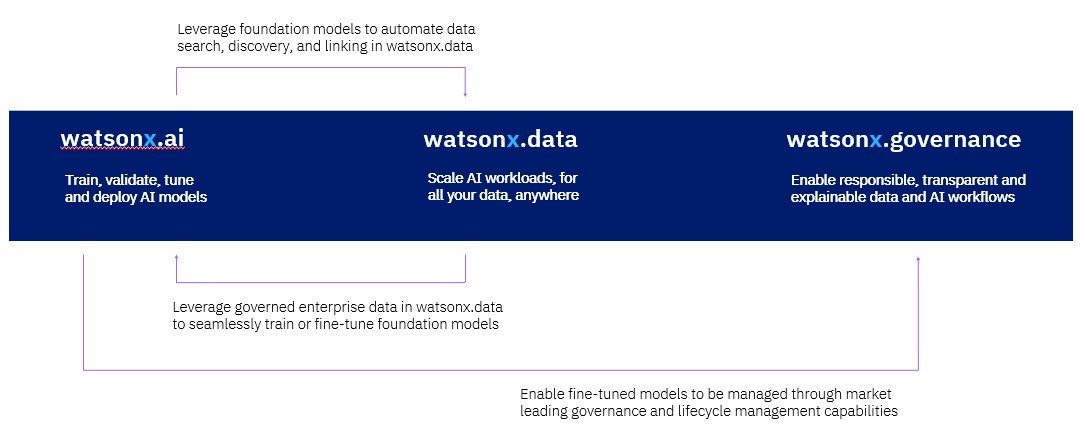

There are three parts to the Watsonx stack.

The first is Watsonx.ai, which is a collection of core models that are either open source or developed by IBM Research. The stack currently has more than twenty different models that IBM Research has put together and they are grouped using the names of metamorphic rocks: Slate, Sandstone and Granite.

The Slate models are the classic encoder-only models, Raghavan explains. These are the models where you want a base model, but you’re not doing generative AI. These templates are great at just doing entity classification and extraction. They’re not generative assets, but they’re fit for purpose and there’s a number of use cases where it will work. Since Sandstone models are coding/decoding models, they lead you to a good balance between generative and non-generative activities. And for large enough Sandstone models, you can do a quick engineering of all the cool stuff you’ve heard of with ChatGPT. And then Granite will be our decoder-only models.

The Watsonx.ai stack is API compatible with the Hugging Face transformer library, which is written in Python, for both AI training and AI inference. This support of the Hugging Face API is critical because the number of models written to Hugging Face is exploding and this API is becoming something of a portability standard. Future-thinking customers won’t be using Virtex on Google or SageMaker on Amazon Web Services, but rather look for a consistent model that can be deployed internally or on any cloud.

PyTorch is the underlying AI framework for Watsonx. IBM has doubled down on both the Python language and the PyTorch framework, Raghavan said, and is working closely with Meta Platforms now that PyTorch has been released into the Linux Foundation.

With PyTorch, we’re working to dramatically improve training efficiency and reduce inference costs, says Raghavan. We can demonstrate that on a pure cloud native architecture, with standard Ethernet networks and no InfiniBand, basic models with 10 or 15 billion parameters can be trained quite well, which is great because our customers care about cost. Not everyone can spend $1 billion on a supercomputer.

I am not joking.

IBM Research has assembled a dataset to train its basic core models that has over 1 trillion tokens, which is a lot by any measure. (A token is generally a fragment of a word or a short word of about four characters in the English language.) Microsoft and OpenAI are said to have a data set of about 13 trillion tokens for GPT-4 and 1.8 trillion parameters. Google’s PaLM has 540 billion parameters and Nvidias MegaTron has 530 billion parameters, by comparison; we don’t know the size of the dataset. IBM customers don’t need something that big, says Raghavan.

There are many customers who want to take a base model and add 100,000 documents to get their own base model to adapt to their use cases. We want to be able to enable them to do this at the lowest possible cost, so we’re working with the Python community and Hugging Face on both inference and training optimization. We also have a partnership with the Ray community which is very good for data pre-processing, benchmarking and validation. We’ve also been working between IBM Research and Red Hat to bring all of this to OpenShift, and are working with the PyTorch community to improve its performance on Kubernetes and improve how we checkpoint for object storage.

The second piece of the IBM AI stack is Watsonx.data, which IBM bills as a replacement for Hadoop. It’s a mix of Apache Ranger persistent storage, Spark in-memory data, Iceberg for table formats, Parquet for column formats with writes and reads, and Orc for heavy reads on data optimized for SQL-like Hive interface for data warehouse overlaid on Hadoop and the Presto distributed SQL environment. (Hive and Presto come from the former Facebook, now Meta Platforms, just like PyTorch does.)

The third piece of the Watsonx stack is Watsonx.governance, and as a provider of enterprise software and systems, IBM is as passionate about governance and security as its customers are. (Our eyes glaze over when it comes to governance, but we’re thankful just the same. . . . )

Here’s the thing: This could Finally be the Watson AI stack that Big Blue can sell.

Way back in 1998, when the dot-com boom was just starting to go crazy, IBM was managing the IT infrastructure for the Winter Olympics in Nagano, Japan. At the time, the Apache web server was three years old, didn’t scale well, and wasn’t exactly stable. Big Blue needed it to be, and so he brought it up. Soon after, that Apache Web server was bundled with Java Application Service Middleware, and IBM’s enterprise customers lined up and bought the product called WebSphere on many of their machines. WebSphere gave Oracle WebLogic and RedHat JBoss a run for the money for two and a half decades and gave IBM tens of billions of dollars in sizable revenue and profits during that time.

Big Blue clearly wants to repeat that story.

#Companies #fourth #wave #artificial #intelligence

Image Source : www.nextplatform.com